As large language models (LLMs) increasingly replace traditional search engines as tools for information gathering, the use of AI in the political arena—and its impact on elections—is inevitable. Recent research published in Nature and Science suggests that AI chatbots are not merely passive sources of election information; they can actively shape voter attitudes in measurable and durable ways.

Senate HELP Testimony on AI

I was honored to testify before the Senate HELP Committee, in my capacity as a Non-Resident Senior Fellow at AEI, on how AI can strengthen support for patients, workers, children, and families. It is no secret that I am an optimist about AI’s promise to expand opportunity and make learning more personal. But optimism must be matched with responsibility, including being honest about the emerging challenges and risks. As AI becomes more persuasive and more present in classrooms, we need to take seriously the risks of over-reliance, misalignment with educational goals, and emotional dependence on systems designed to imitate empathy.

When AI Feels Human: The Promise and Peril of Digital Empathy

A recent webinar with Julia Freeland Fisher, Ryan McBain, and Pat Pataranutaporn explored how AI is learning to simulate warmth, patience, and compassion—traits that make systems feel empathetic. These advances promise major benefits, including personalized tutoring, mental-health support, and reduced loneliness, but also raise concerns about dependency, weakened human bonds, and manipulative “addictive intelligence.” Experts urged designing AI that promotes human flourishing, measuring emotional and social well-being—not just accuracy—and enforcing transparency and child-safety standards. The central question: can AI empathy strengthen human connection rather than replace it?

Reading the Mind of the Machine: Why GPT-5’s Chain-of-Thought Monitoring Matters for AI Safety

OpenAI’s GPT-5 introduces a breakthrough in AI safety: real-time monitoring of its internal reasoning, or chain-of-thought (CoT). GPT-5 cut deceptive reasoning from 4.8% in earlier models to 2.1%, with 81% precision and 84% recall in flagging risks. Yet studies warn this interpretability may erode as models evolve toward opaque, machine-optimized reasoning. Both OpenAI and academic researchers urge treating CoT readability as a core safety metric—measured, reported, and preserved through training—to ensure humans retain visibility into how advanced AI systems reason, decide, and potentially deceive.

The AI Action Plan: Securing America’s Future in the Age of Intelligence

The White House’s 2025 AI Action Plan outlines an ambitious roadmap aimed at securing America’s global leadership in artificial intelligence through rapid innovation, strategic infrastructure, and assertive international engagement. Emphasizing open-source models, regulatory sandboxes, and workforce initiatives, the plan breaks with previous approaches by prioritizing deployment and competitiveness over precautionary regulation. However, critical gaps remain in AI interpretability, state-federal dynamics, copyright clarity, and execution specifics. Ultimately, success will depend not merely on technological advancement, but on ensuring responsible governance, maintaining public trust, and effectively managing AI’s profound risks alongside its transformative opportunities.

The Intelligence of Things Is Here—Reflections from Google I/O 2025

Google I/O 2025 underscored a pivotal shift toward the "Intelligence of Things," embedding advanced AI into everyday tools and devices, seamlessly integrating technology into daily life rather than creating isolated products. Google's vision emphasizes proactive, personalized AI, exemplified by Gemini 2.5’s deep research tools and immersive communication innovations like Beam and real-time multilingual translation in Google Meet. The event highlighted AI's transformative impact on education, notably through Project Astra's multimodal learning capabilities and LearnLM’s integration into Gemini, significantly enhancing pedagogical effectiveness. Central to this evolution is the critical role of agile public policy in facilitating safe innovation, ensuring AI technologies serve human needs by enhancing safety, productivity, and quality of life.

Agents, Access, and Advantage: Lessons from Meta’s LlamaCon

Meta was kind enough to extend an invitation for me to attend its inaugural LlamaCon—a one-day developer summit devoted to the Llama family of open-source large language models. It offered the chance to better understand the direction in which both the technology and its surrounding ecosystem are moving, and therefore merits a close read by anyone shaping AI strategy or policy.

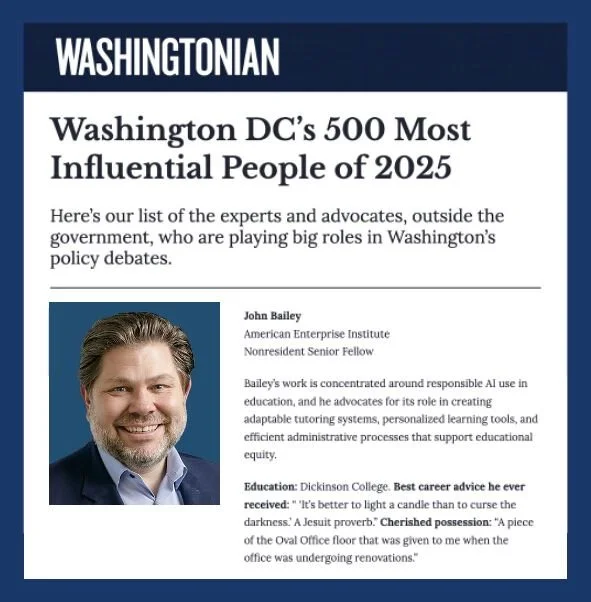

Honored to Be Included In Washingtonian Magazine's Most Influential People

It’s an honor to be named among Washingtonian Magazine's Most Influential People, alongside so many exceptional leaders whom I deeply admire, learn from, and have the privilege to work with.

The AI Race Accelerates: Key Insights from the 2025 AI Index Report

AI is advancing faster than anyone expected — and the 2025 AI Index Report proves it. From AI models matching Nobel-level intellect to a 280x drop in computing costs, Stanford’s latest report maps the jaw-dropping acceleration of AI capabilities. The U.S. still leads in AI models and private investment, but China is closing the quality gap—and fast. Meanwhile, AI-driven labs are already making major scientific breakthroughs, like designing nanobodies to fight COVID-19, and outperforming doctors in diagnostic accuracy.

Generative AI: The Emerging Coworker Transforming Teams

AI is transforming the workplace by enhancing collaboration, speeding up tasks, and expanding access to expertise. A Harvard-Wharton-P&G study found individuals using AI performed as well as human teams, and AI-assisted teams were nearly three times more effective. AI also helped less experienced employees contribute more and encouraged interdisciplinary thinking. Pennsylvania’s ChatGPT pilot showed similar benefits, with employees saving 95 minutes daily and improving workflows. A national survey by JFF found that 57% of workers already see AI reshaping their jobs, though few receive formal training. These findings suggest AI isn’t just a tool for efficiency—it’s a partner reshaping how teams work, learn, and innovate.

Why Your Next Coworker Might Be an AI Agent

In 2025, your next coworker might not be human, but an autonomous AI agent capable of performing specialized, real-world tasks collaboratively and independently. Groundbreaking experiments from Google, Stanford, and Chan Zuckerberg BioHub reveal that teams of AI agents—ranging from computational biologists to critical reviewers—can rapidly innovate, discover new medical treatments, and respond dynamically to emerging challenges. As AI becomes embedded into workplace dynamics, businesses face an exciting yet challenging future, where managing AI agents as digital employees might soon become as common as managing human teams.

Class Disrupted: How AI is Democratizing Access to Expertise in Education

I had the great joy and privilege of joining Michael Horn and Diane Tavenner for an episode of Class Disrupted. We explore AI’s transformative potential in education, particularly its ability to democratize access to expertise—shifting from just providing information to offering expert-level insights at near-zero cost. I discuss how AI can serve as a teaching assistant, tutor, and even a consultant for educators, while also weighing the risks, from amplifying biases to reshaping workforce skills. The conversation unpacks AI’s broader implications, from labor markets to education policy, and considers how schools might—or might not—adapt to these rapid changes.

Lessons from China’s DeepSeek: A Wake-Up Call for AI Innovation

DeepSeek's R1 model from China has sent shockwaves through the AI industry, matching OpenAI's top models while claiming development costs of just $5 million. Though experts dispute this figure, R1's emergence has triggered market value losses and security concerns. US companies quickly responded, with Google updating Gemini to outperform R1. The situation highlights tensions around export controls, security vulnerabilities, and the strategic importance of open-source AI development that aligns with democratic values. Security risks have led several US entities to ban DeepSeek. America must accelerate innovation and education to maintain AI leadership.

My AI Advisers: Lessons from a Year of Expert Digital Assistants

AI has become an invaluable partner for me, giving me instant access to a wide array of expert “assistants.” I now have a data analyst, driver with Waymo, brainstorming partner, legislative analyst, medical assistant, start-up advisor, graphic designer, and researcher at my fingertips, ready to help whenever I need specialized skills and knowledge.

America Can’t Afford to Lose the High-Skilled Talent Race in Today’s Competitive Markets

A recent social media clash that erupted between Elon Musk, Vivek Ramaswamy and Trump loyalists over high-skilled immigration reform exposed deep ideological rifts within the Republican coalition. But the importance of the debate over immigration policy and the American education system extends far beyond social media — solving these problems is critical to America’s competitiveness. By combining pragmatic immigration reforms, bold educational investments and innovative AI-driven learning, we can forge the “Talent Dominance” agenda we desperately need.

AI Tutors: Hype or Hope for Education?

In an analysis of Sal Khan's "Brave New Words" and the evolving landscape of AI in education, I present a case for AI-powered tutoring as a transformative force. Recent advancements in speech, image analysis, and emotional intelligence, combined with promising research studies showing significant learning gains, suggest AI tutoring could help address urgent educational challenges such as pandemic learning loss and chronic absenteeism.

Appointed to Virginia's AI Task Force

I’m deeply honored to be appointed by Governor Youngkin to serve on Virginia’s inaugural AI Task Force. This distinguished group of leaders from academia, nonprofits, and industry will advise policymakers on harnessing AI to transform government services, streamline regulations, and position Virginia as a leader in responsible AI innovation. As we unlock AI’s potential to improve efficiency and reduce burdens on state agencies, we must also ensure thoughtful safeguards to protect privacy, fairness, and public trust.

Why the Veto of California Senate Bill 1047 Could Lead to Safer AI Policies

Gov. Gavin Newsom’s recent veto of California’s Senate Bill (SB) 1047, known as the Safe and Secure Innovation for Frontier Artificial Intelligence Models Act, reignited the debate about how best to regulate the rapidly evolving field of artificial intelligence. Newsom’s veto illustrates a cautious approach to regulating a new technology and could pave the way for more pragmatic AI safety policies.

The Deception Dilemma: When AI Misleads

An emerging body of research suggests that large language models (LLMs) can “deceive” humans by offering fabricated explanations for their behavior or concealing the truth of their actions from human users. The implications are worrisome, particularly because researchers do not fully understand why or when LLMs engage in this behavior.

The Promise and Limitations of AI in Education: A Nuanced Look at Emerging Research

New research reveals the transformative potential and complex realities of AI in education. From AI-powered grading systems that match human accuracy while saving countless hours, to AI tutors that both enhance and hinder learning, to AI's ability to reason like humans and predict the results of social science experiments, these studies paint a nuanced picture of AI's role in the future of education. As the field rapidly evolves, enthusiasts and skeptics alike must grapple with the profound implications of AI for teaching and learning and ensure their views are shaped by research.