Published on AEI Ideas

Open AI Models: A Step Toward Innovation or a Threat to Security?

As AI models become more advanced, the conversation around their accessibility has intensified. At the heart of this debate is a crucial question: Should the “weights” of large language models be openly accessible to the public, or should they be closely guarded to prevent misuse?

In AI, especially deep neural networks, weights are the numerical values that encode what a model has learned. They govern the strength of the connections between neurons in a neural network, determining how the model processes information and makes predictions. During training, these weights are adjusted repeatedly, so their final values reflect the knowledge and capabilities the model has acquired. Because of their importance, weights are at the center of discussions about the future of AI, discussions that touch on innovation, security, and the democratization of advanced capabilities.
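To make the idea of weights concrete, here is a minimal Python sketch of a single artificial neuron learning from four examples. The function names, the toy data, and the learning rate are illustrative assumptions and are not taken from any real model; frontier models apply the same basic idea of nudging numbers to reduce error, but across billions of weights.

```python
# A single artificial "neuron": output = w1*x1 + w2*x2 + bias.
# The weights and bias are plain numbers; training nudges them to reduce error.

def predict(weights, bias, inputs):
    """Weighted sum of the inputs plus a bias term."""
    return sum(w * x for w, x in zip(weights, inputs)) + bias

def train(examples, steps=2000, lr=0.05):
    """Adjust the weights a little after every example (gradient descent)."""
    weights, bias = [0.0, 0.0], 0.0  # start with no knowledge at all
    for _ in range(steps):
        for inputs, target in examples:
            error = predict(weights, bias, inputs) - target
            weights = [w - lr * error * x for w, x in zip(weights, inputs)]
            bias -= lr * error
    return weights, bias

# Toy data produced by a hidden rule: target = 2*x1 - 1*x2 + 0.5
examples = [
    ((0.0, 0.0), 0.5),
    ((1.0, 0.0), 2.5),
    ((0.0, 1.0), -0.5),
    ((1.0, 1.0), 1.5),
]

weights, bias = train(examples)
print(weights, bias)  # the learned numbers approximate the hidden rule
```

In this sketch, everything the neuron "knows" lives in three numbers. Publishing a model's weights means publishing the equivalent set of numbers for the full model, which is why anyone who downloads them can run or further train it.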

Critics argue that open-weight AI models pose significant risks, as they make advanced AI publicly accessible. Potential threats include AI-powered disinformation campaigns and enhanced cyber-attacks. There is also a risk that state and non-state adversaries will use open-weight models to develop military capabilities. Such applications were once limited to major powers but could become more widespread if AI models are freely available.

Proponents of open-weight models argue that transparency and accessibility are crucial for accelerating AI research and innovation. By releasing model weights publicly, companies like Meta and Mistral enable faster research and collaboration, leading to more robust and trustworthy AI systems. This openness also allows for greater scrutiny, enhancing safety and security by identifying and mitigating potential flaws or biases. Open-weight models also democratize AI capabilities, making them accessible to a broader audience, including small businesses, researchers, nonprofits, government agencies, and developers.

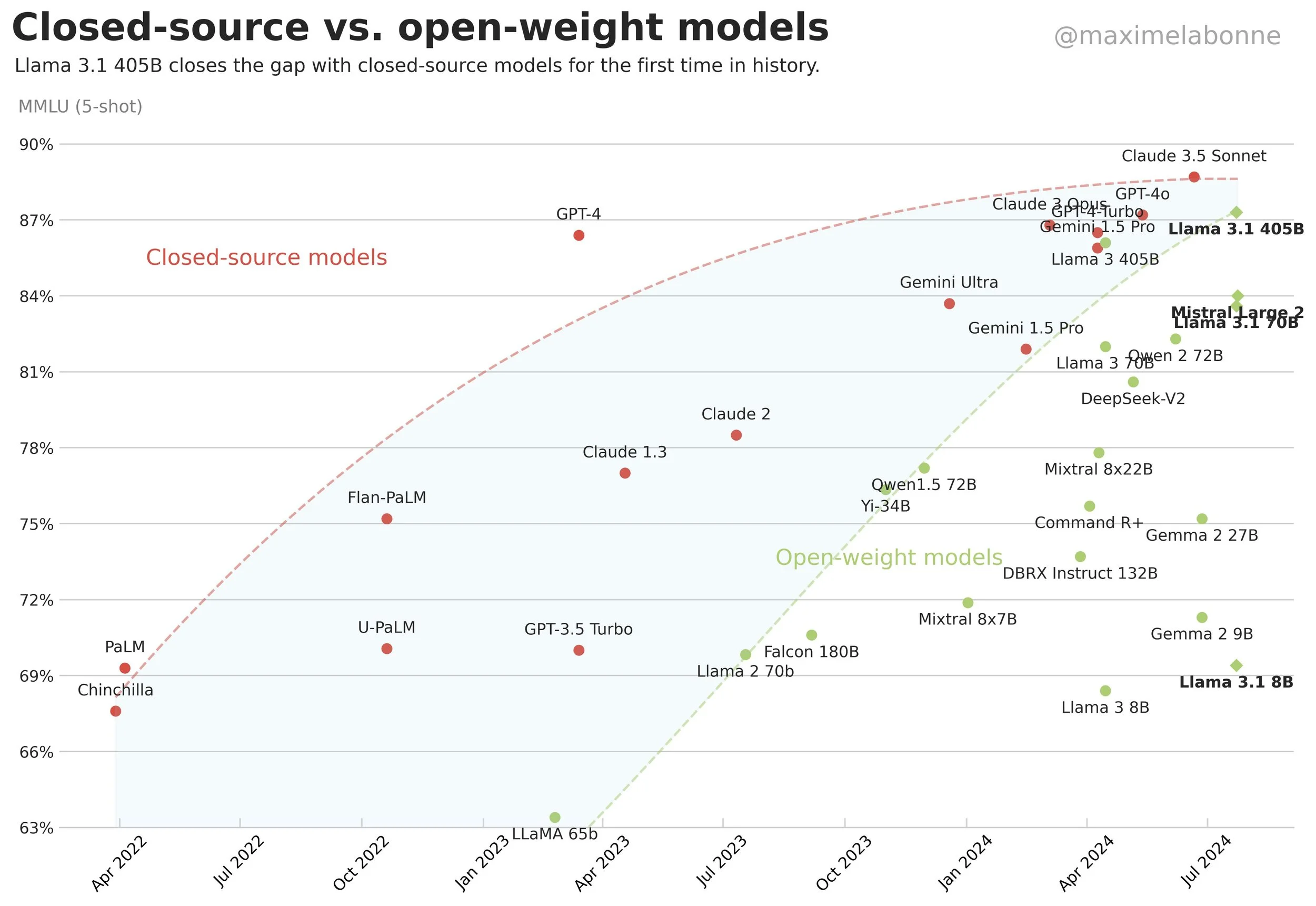

Recent developments have brought this debate into sharper focus. Meta released Llama 3.1, a model with 405 billion parameters, and Mistral introduced Mistral Large 2. These are the first frontier-level open-weight models to achieve state-of-the-art benchmark results.

Mark Zuckerberg wrote an important letter accompanying Llama 3.1’s release that discussed why open-weight models are beneficial not only for developers and Meta but also for the world. He emphasized that organizations have diverse AI needs: Some require small, on-device models for classification tasks, while others need larger models for complex operations. Unlike their closed counterparts, these open-weight models are available for download, allowing developers to fully customize and fine-tune them according to their specific needs. They also offer flexibility, independence, and enhanced security, as organizations can run AI wherever they choose. This addresses concerns about data privacy, security, and long-term reliability.

Zuckerberg also discussed the national security concerns. He argued that the United States’ strength lies in decentralized and open innovation. While some fear that open models could fall into the hands of adversaries like China, he contended that closing off these models would likely disadvantage the US and its allies more than it would protect them. Instead, he advocated building a robust open ecosystem, with leading companies working closely with the government and allies to maintain a sustainable first-mover advantage.

This last point is crucial, as China is actively open-sourcing many of its AI technologies. By restricting open-weight AI development near the frontier, the US may inadvertently hinder its own innovation and competitiveness, ultimately pushing more countries to adopt Chinese AI standards and tools that will not align with liberal democratic values. OpenAI CEO Sam Altman raised a similar point in a recent op-ed:

> Making sure open-sourced models are readily available to developers in those nations will further bolster our advantage. The challenge of who will lead on AI is not just about exporting technology, it’s about exporting the values that the technology upholds.

In parallel to these industry developments, the US Commerce Department’s National Telecommunications and Information Administration (NTIA) released a report that concluded that there is not enough evidence to “definitively determine either that restrictions on such open-weight models are warranted, or that restrictions will never be appropriate in the future.” Instead, NTIA recommends that the federal government collect and evaluate evidence through standards, audits, and research.

In a post-Chevron world, it’s unclear whether federal agencies have the authority to enforce audits or restrict open-weight models. Without clear authority, agencies should proceed with caution. Policymakers must weigh the potential risks of these models against the risks of premature or excessively burdensome regulations, which could hinder the democratization of AI in key fields like education, healthcare, and government services. Rather than restricting these models, the US should foster more AI innovation and build a robust ecosystem with leading companies, government, and allies to maintain a sustainable first-mover advantage. It should also ensure that open-sourced models are available to developers in allied nations, promoting US values while empowering global partners to harness the benefits of emerging technologies.